Five Minute Facts on Packet Timing

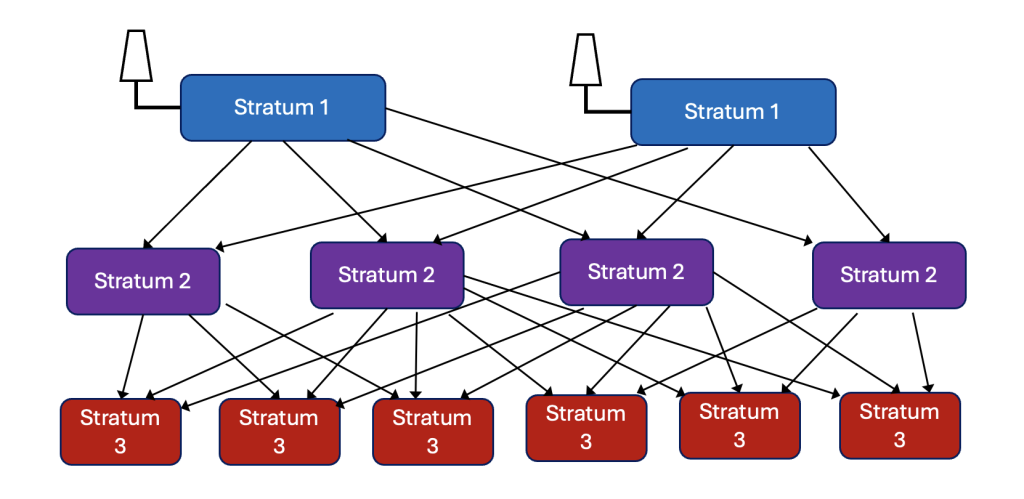

Larger networks usually deploy their NTP servers in a tree structure, such as the one in Figure 1. Note that the Stratum 1 servers typically have a GNSS time source, but the higher-stratum servers do not. Instead, each of them acts as both a server and a client. The point of this arrangement is to keep the Stratum 1 servers from being overwhelmed by too many NTP requests.
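To make the hierarchy concrete, here is a small Python sketch that assigns strata to a hypothetical tree like the one in Figure 1. The server names, the `sources` dictionary layout, and `assign_strata` are made up for illustration. It uses the usual rule that a GNSS-fed server is Stratum 1 and any other server is one more than the source it synchronizes to. As a simplification it just takes the lowest-stratum source, whereas a real NTP implementation selects its source using more than stratum alone.

```python
from typing import Dict, List


def assign_strata(sources: Dict[str, List[str]]) -> Dict[str, int]:
    """Return each server's stratum: 1 if GNSS-fed (no upstream), else best source + 1.

    Hypothetical helper; assumes the configuration contains no timing loops.
    """
    strata: Dict[str, int] = {}

    def stratum(name: str) -> int:
        if name not in strata:
            upstream = sources[name]
            # Simplification: use the lowest-stratum source.
            strata[name] = 1 if not upstream else 1 + min(stratum(s) for s in upstream)
        return strata[name]

    for name in sources:
        stratum(name)
    return strata


# Hypothetical tree: each server maps to the servers it uses as time sources.
# An empty list marks a Stratum 1 server with its own GNSS receiver.
tree = {
    "gnss-a": [], "gnss-b": [],
    "s2-east": ["gnss-a", "gnss-b"], "s2-west": ["gnss-b"],
    "s3-lab": ["s2-east"], "s3-office": ["s2-east", "s2-west"],
}
print(assign_strata(tree))
```

The sketch only works because the configuration is a tree: if a server's sources eventually led back to itself, the recursion would never bottom out and Python would raise `RecursionError`.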

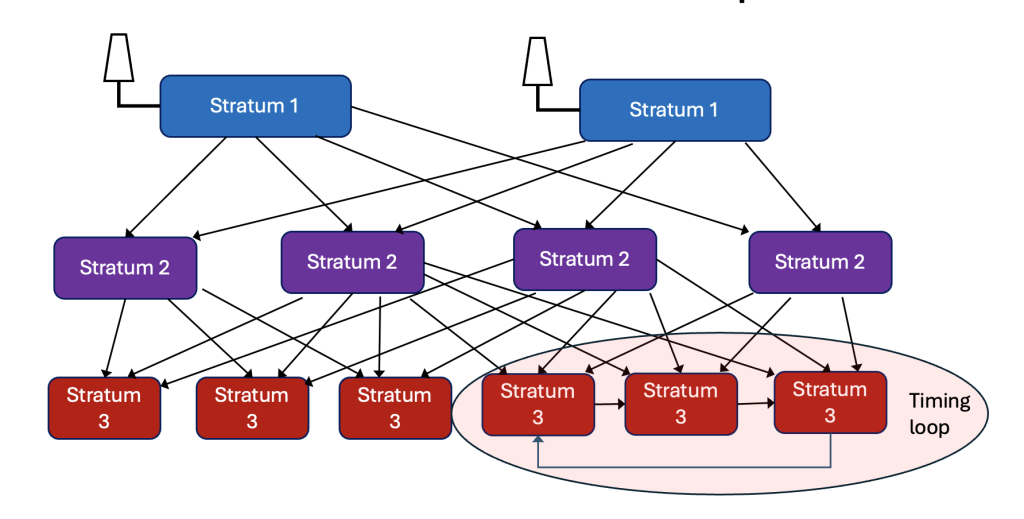

Unlike PTP, whose Best TimeTransmitter Clock Algorithm (aka BMCA) selects the timing hierarchy automatically, NTP lets the network designer organize servers any way they wish. Go ahead, express yourselves. However, that freedom means it is possible to create a timing loop, as in Figure 2. And that is bad, because an NTP server that is partially synchronized to itself can end up amplifying its own timing errors in a positive feedback loop. This can happen more easily than you might think, especially over time as NTP servers are added, removed, and reconfigured.
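In graph terms, a timing loop like the one in Figure 2 is a cycle in the directed graph formed by each server's list of time sources. If the whole configuration happens to be available in one place, an ordinary depth-first search will find such a cycle. The Python sketch here is only that kind of offline sanity check on a hypothetical configuration (the dictionary layout, server names, and `find_timing_loop` are made up for illustration); it is not part of NTP. In practice no single server sees the whole graph, which is the gap the NTPv5 mechanism described next is meant to fill.

```python
from typing import Dict, List, Optional


def find_timing_loop(sources: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one loop as a list of server names (first == last), or None."""
    UNVISITED, ON_PATH, DONE = 0, 1, 2
    state: Dict[str, int] = {}
    path: List[str] = []

    def visit(name: str) -> Optional[List[str]]:
        state[name] = ON_PATH
        path.append(name)
        for src in sources.get(name, []):
            s = state.get(src, UNVISITED)
            if s == ON_PATH:
                # Following time sources led back to a server on the current path.
                return path[path.index(src):] + [src]
            if s == UNVISITED:
                found = visit(src)
                if found:
                    return found
        path.pop()
        state[name] = DONE
        return None

    for name in sources:
        if state.get(name, UNVISITED) == UNVISITED:
            found = visit(name)
            if found:
                return found
    return None


healthy = {"gnss-a": [], "s2": ["gnss-a"], "s3": ["s2"]}
looped = {"gnss-a": [], "s2": ["gnss-a", "s3b"], "s3a": ["s2"], "s3b": ["s3a"]}
print(find_timing_loop(healthy))  # None
print(find_timing_loop(looped))   # ['s2', 's3b', 's3a', 's2']
```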

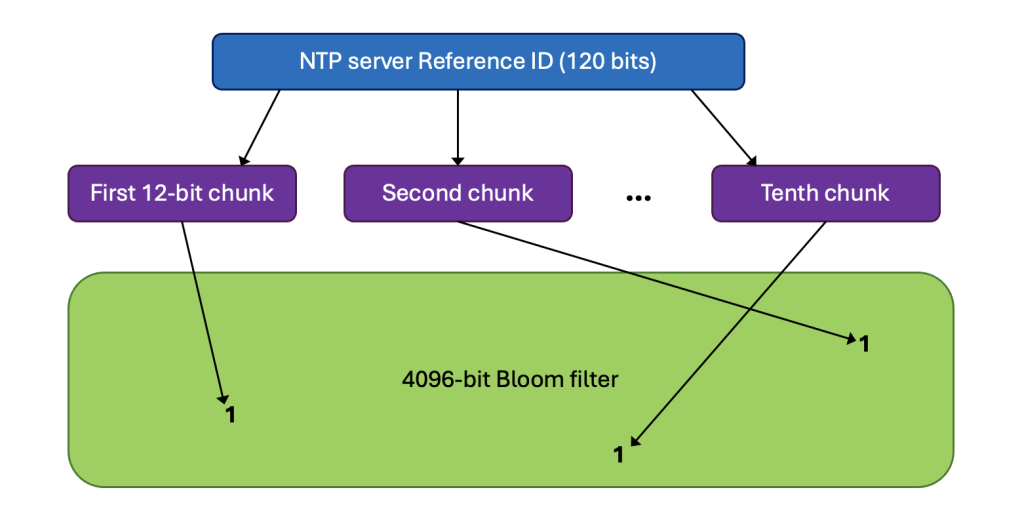

The good news is that the NTPv5 draft contains an optional loop detection mechanism using Bloom filters. You might reasonably ask, what the heck is a Bloom filter? At least I did when I first heard of it. The easiest way to answer that is to explain how it works for our NTP loop detection problem. In NTPv5 the Bloom filter is specified as a 4096-bit field. The larger the filter, the lower the chance of a false positive when detecting loops. There are no false negatives, i.e., a loop is always detected.
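For readers who want to see the general data structure first, here is a minimal, generic Bloom filter in Python. It is a textbook version, not the NTPv5 mechanism: it derives its `k` bit positions by hashing the item with SHA-256, an illustrative choice, whereas NTPv5 takes the positions directly from chunks of a random Reference ID, as described next. The class and method names are hypothetical. The key property matches the text: anything that was added is always reported as present (no false negatives), but an item that was never added can occasionally be reported as present too (a false positive).

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Generic Bloom filter: an m-bit field plus k bit positions per item."""

    def __init__(self, m_bits: int = 4096, k: int = 10) -> None:
        self.m = m_bits
        self.k = k
        self.bits = 0  # a Python int used as the m-bit field, all zeros initially

    def _positions(self, item: bytes) -> Iterator[int]:
        # Derive k positions by hashing (index, item) with SHA-256 (illustrative choice).
        for i in range(self.k):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:4], "big") % self.m

    def add(self, item: bytes) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item: bytes) -> bool:
        # Always True for added items; rarely True for items never added.
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


bf = BloomFilter()
for name in (b"server-1", b"server-2", b"server-3"):
    bf.add(name)
print(bf.might_contain(b"server-2"))  # True
```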

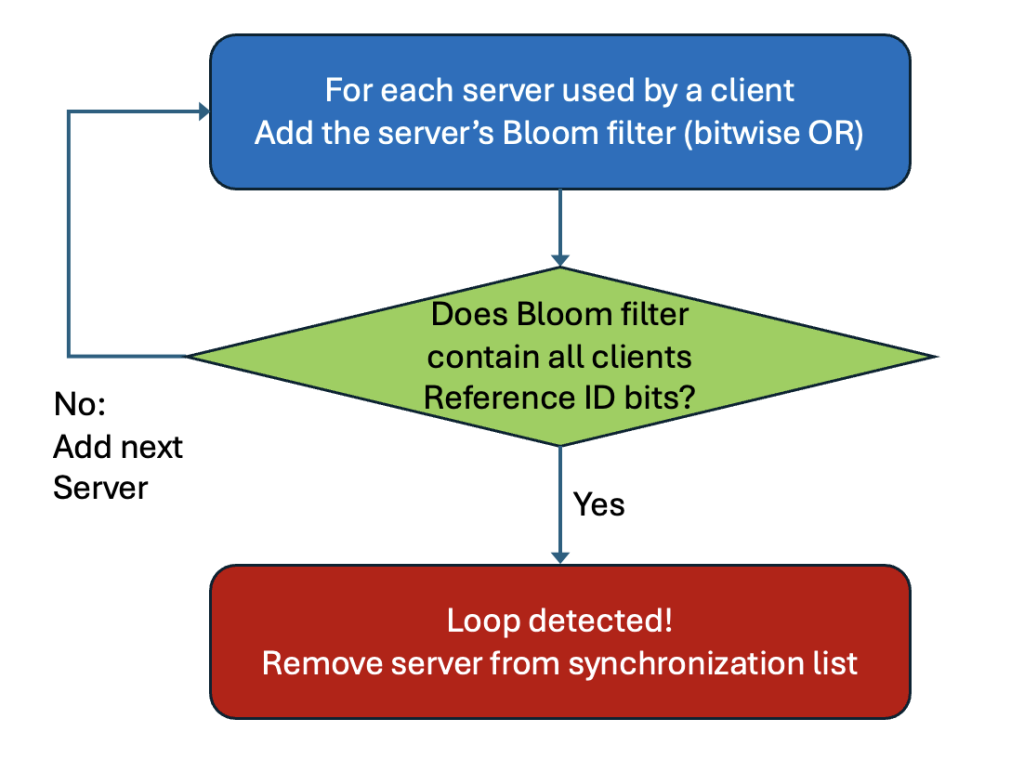

Here’s how it works. Each NTP server has a randomly generated 120-bit Reference ID. The server first initializes its Bloom filter to all zeros. Then it adds its Reference ID to the filter by dividing the ID into ten 12-bit chunks. The value of each chunk (0 to 4095) points to a bit address in the filter, and that bit is set to 1. Kind of confusing, but perhaps Figure 3 will help to clarify the procedure. Next, the Bloom filters of all the servers it uses as time sources are combined into its own filter using a bitwise OR. After all this, a filter bit will be 1 if any chunk of the server's own Reference ID, or of any upstream server's Reference ID, points to that bit. This is the version of the filter that the server sends to its clients.

A client does not add its own Reference ID to its Bloom filter, but it does OR together the filters from all its servers. Each time a client adds the filter from one of its servers, it can check whether all the bits corresponding to its own Reference ID are set in that server's filter. If they are, there might be a timing loop, and that server is removed as a source. See Figure 4.
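The steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the NTPv5 wire format: the filter is held as a Python integer, the chunk order (least significant 12 bits first) and all function names are hypothetical, and the draft's extension-field encoding is not modelled. It shows the three operations described: turning a Reference ID into filter bits, building the filter a server advertises, and the client's per-server loop check.

```python
import secrets
from typing import Iterable

FILTER_BITS = 4096  # 2**12, so one 12-bit chunk addresses exactly one filter bit
CHUNK_BITS = 12
NUM_CHUNKS = 10     # 10 chunks * 12 bits = 120-bit Reference ID


def new_reference_id() -> int:
    """Generate a random 120-bit Reference ID."""
    return secrets.randbits(NUM_CHUNKS * CHUNK_BITS)


def id_to_filter(ref_id: int) -> int:
    """Return a filter (Python int used as a 4096-bit field) holding only this ID."""
    bits = 0
    for i in range(NUM_CHUNKS):
        # Take chunk i (assumed ordering: least significant 12 bits first).
        chunk = (ref_id >> (i * CHUNK_BITS)) & (FILTER_BITS - 1)
        bits |= 1 << chunk  # set the filter bit the chunk points to
    return bits


def filter_to_advertise(own_id: int, source_filters: Iterable[int]) -> int:
    """Filter a server sends to its clients: its own ID OR-ed with all source filters."""
    bits = id_to_filter(own_id)
    for f in source_filters:
        bits |= f
    return bits


def is_possible_loop(own_id: int, server_filter: int) -> bool:
    """True if every bit of our own ID is already set in one server's filter."""
    mine = id_to_filter(own_id)
    return (server_filter & mine) == mine


# Usage: a chain a -> b -> c, where each server uses the previous one as its source.
a, b, c = new_reference_id(), new_reference_id(), new_reference_id()
fa = filter_to_advertise(a, [])
fb = filter_to_advertise(b, [fa])
fc = filter_to_advertise(c, [fb])

# If b were misconfigured to also take time from c, b would find its own bits in c's filter.
print(is_possible_loop(b, fc))  # True
```

Because each advertised filter already contains everything upstream of that server, the check also catches longer loops: `a`'s bits are in `fc` too, so `a` would reject `c` as a source in the same way.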

A false positive can occur if all the bits of a client's Reference ID happen to be set by some combination of other Reference IDs, or if two servers randomly generate the same Reference ID. However, both situations are unlikely, even for large NTP server trees.
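To put a rough number on "unlikely", the standard Bloom filter estimate can be applied, treating the ten chunks of each random Reference ID as independent, uniformly distributed bit positions (an approximation; chunks of one ID can occasionally coincide). Here `n_ids` is the number of Reference IDs folded into the filter a client checks, i.e. the servers upstream of it. The second function is a simple union (birthday) bound on any two of `n` servers drawing the same 120-bit ID. Both function names are made up for this sketch.

```python
def false_positive_rate(n_ids: int, m_bits: int = 4096, k: int = 10) -> float:
    """Approximate chance that all k bits of an unrelated ID are already set."""
    # Expected fraction of filter bits set after inserting n_ids IDs of k bits each.
    fraction_set = 1.0 - (1.0 - 1.0 / m_bits) ** (k * n_ids)
    return fraction_set ** k


def duplicate_id_bound(n_servers: int, id_bits: int = 120) -> float:
    """Union bound on any two of n_servers drawing the same random ID."""
    pairs = n_servers * (n_servers - 1) / 2
    return pairs / 2 ** id_bits


for n in (5, 20, 100):
    print(f"{n:>4} upstream IDs: false positive ~ {false_positive_rate(n):.2e}, "
          f"duplicate ID <= {duplicate_id_bound(n):.2e}")
```

The false positive estimate grows quickly as `n_ids` increases, but `n_ids` counts only the servers upstream of a given client, not every server in the network. Rerunning with a smaller `m_bits` also shows why a larger filter helps.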

If you have any questions about network timing, feel free to send me an email at doug.arnold@meinberg-usa.com.
