Five Minute Facts About Packet Timing

By Doug Arnold

A network architect’s job is never done. It is not enough to design the network and see that design implemented. You need to keep checking that everything still works as the network evolves. This is certainly true for your network-based timing system. First, remember that your network is built to accommodate data. Time is an afterthought. Changes to the network that have no effect on data might break your timing. And the network is always changing. New applications are added, changing the pattern of traffic. New network appliances are added for expansion or to replace outdated equipment. Are the applications that need timing still getting the accuracy they need? Not only do you need to know this, you might need to prove it, whether to government regulators or to your organization’s own internal customers. The answer to these problems is monitoring.

There are basically three levels of network time monitoring:

1. Self-reported performance

2. Network measurement

3. Measurement with a GNSS enabled clock

Of these, number 1, self-reporting, is the easiest. Nearly every device that includes a PTP slave or an NTP client has management interfaces that can report the timing application’s synchronization state and its estimate of the time offset from the NTP server or PTP master. Most likely you have something more urgent to attend to than staring at the offset values of all of the clocks on your network. But you can set up notifications, for example SNMP traps, for when a device loses its synchronization source or sees larger than expected time offsets. Because this is easy and will catch many network synchronization problems, you should definitely do it.
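As a minimal sketch of the notification logic, assume the status of each device has already been collected (for example by SNMP polling) into a hypothetical `ClockStatus` record. The record, its field names and the 1 µs threshold are illustrative assumptions, not any product's MIB or default setting.

```python
from dataclasses import dataclass


@dataclass
class ClockStatus:
    """Self-reported state of one PTP slave or NTP client (hypothetical record)."""
    device: str
    synchronized: bool  # does the device report a valid sync source?
    offset_ns: float    # the device's own estimate of its offset from master/server


def check_self_reported(statuses, max_offset_ns=1000.0):
    """Return alert strings for devices that lost sync or report large offsets."""
    alerts = []
    for s in statuses:
        if not s.synchronized:
            alerts.append(f"{s.device}: lost synchronization source")
        elif abs(s.offset_ns) > max_offset_ns:
            alerts.append(
                f"{s.device}: reported offset {s.offset_ns:.0f} ns "
                f"exceeds {max_offset_ns:.0f} ns"
            )
    return alerts


if __name__ == "__main__":
    # Hypothetical polled values
    polled = [
        ClockStatus("switch-a", True, 120.0),
        ClockStatus("server-b", False, 0.0),
        ClockStatus("plc-c", True, -2500.0),
    ]
    for alert in check_self_reported(polled):
        print(alert)
```

This only checks what each device believes about itself, which is exactly the limitation discussed next.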

Are you done? Unfortunately, no. An NTP client or PTP slave doesn’t necessarily know the correct value of its clock offset. That’s because of asymmetry, the bane of network time transfer. Both NTP and PTP assume that the network propagation delay is the same in the forward and reverse directions: client to server and server to client in NTP, and master to slave and slave to master in PTP. But those delays may differ due to:

- Queuing noise

- Ethernet PHYs

- Cable TX/RX skew

- Errors contributed by transparent clocks and boundary clocks in PTP, or by higher stratum servers in NTP.

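So why assume symmetry? Because we have to. A two-way exchange gives the client/slave only two measured quantities, the apparent transit time in each direction, but there are three unknowns: its clock offset and the forward and reverse delays. Without an assumption about the delays, there isn’t enough information to solve for the offset. Unless, of course, you want to measure the asymmetry of every cable, PHY and switch in your network. If you don’t work for CERN, then I’ll bet you can’t justify making time for that. No problem: we still have two remaining levels of monitoring on our list.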
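To see why the offset cannot be solved without assuming symmetry, here is a small simulation of the standard four-timestamp exchange, in PTP terms: t1 is the Sync sent by the master, t2 the Sync received by the slave, t3 the Delay_Req sent by the slave and t4 the Delay_Req received by the master. NTP uses the same arithmetic with client/server roles. The function names and example numbers are hypothetical; times are in nanoseconds and the offset is slave clock minus master clock.

```python
def two_way_exchange(true_offset_ns, delay_ms_ns, delay_sm_ns, t1=0.0, t3=10_000.0):
    """Simulate the four timestamps of one two-way exchange.

    true_offset_ns is slave clock minus master clock. t1 and t4 are read on
    the master's clock; t2 and t3 are read on the slave's clock.
    """
    t2 = t1 + delay_ms_ns + true_offset_ns  # Sync arrives at the slave
    t4 = t3 - true_offset_ns + delay_sm_ns  # Delay_Req arrives at the master
    return t1, t2, t3, t4


def estimate(t1, t2, t3, t4):
    """Offset and mean path delay, computed under the symmetric-delay assumption."""
    fwd = t2 - t1  # = delay_ms + offset
    rev = t4 - t3  # = delay_sm - offset
    offset = (fwd - rev) / 2      # = true offset + (delay_ms - delay_sm) / 2
    mean_delay = (fwd + rev) / 2  # = (delay_ms + delay_sm) / 2
    return offset, mean_delay


if __name__ == "__main__":
    # Scenario A: slave is 100 ns ahead, path asymmetric by 400 ns.
    a = two_way_exchange(100.0, 5200.0, 4800.0)
    # Scenario B: slave is 300 ns ahead, path perfectly symmetric.
    b = two_way_exchange(300.0, 5000.0, 5000.0)
    print("identical timestamps:", a == b)  # prints: identical timestamps: True
    est, delay = estimate(*a)
    print(f"estimated offset {est:.0f} ns, mean delay {delay:.0f} ns")
    print(f"error in scenario A: {est - 100.0:.0f} ns")
```

The two scenarios produce identical timestamps, so no slave could tell them apart. The symmetric estimate reports 300 ns for both, which in scenario A is wrong by half the delay difference, (5200 - 4800)/2 = 200 ns.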

We can measure the accuracy of a PTP slave or NTP client in a network using another device that has its own source of time. Figure 1 shows how a PTP slave can be monitored by a second, backup PTP grandmaster.

Figure 1. PTP slave verification via network measurement by a backup Grandmaster capable clock.

The measurement mechanism could be NetSync Monitor, created by Meinberg but implemented by a number of organizations, the monitoring TLVs in the upcoming update to IEEE 1588, or some other mechanism. See future posts for details on these mechanisms. Since the measurement transfers time back through the network, it is subject to the same possibility of asymmetry as the original time transfer. So we want the network path from the slave to the measurement node to be different from the path from the slave to the network’s source of time. That way we avoid common-mode errors. If the error in the time transfer plus the error in the measurement is within the application’s timing requirements, then you can have confidence in your PTP slaves’ timing. The same approach works for NTP, and NetSync Monitor supports both PTP and NTP. Note that notifications and automated archive logs can be generated from these measurements. Because, after all, you’re busy.
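The pass/fail reasoning in that paragraph is a simple error budget. If a measured offset m equals the slave's true error plus the measurement's own error, then the true error can be no larger than |m| plus a bound on the measurement error. A minimal sketch, assuming you can state such a bound for your measurement path; the function name, parameters and numbers are hypothetical.

```python
def verify_slave(measured_offsets_ns, measurement_error_bound_ns, requirement_ns):
    """Conservative check of a monitored slave against its timing requirement.

    Each measured offset m = (true slave error) + (measurement error), so by the
    triangle inequality |true error| <= |m| + measurement_error_bound_ns.
    Returns (worst-case bound in ns, True if the bound meets the requirement).
    """
    if not measured_offsets_ns:
        raise ValueError("no measurements to verify")
    worst_bound = max(abs(m) for m in measured_offsets_ns) + measurement_error_bound_ns
    return worst_bound, worst_bound <= requirement_ns


if __name__ == "__main__":
    # Hypothetical offsets reported by the backup grandmaster for one slave
    samples = [120.0, -340.0, 210.0]
    bound, ok = verify_slave(samples, measurement_error_bound_ns=250.0, requirement_ns=1000.0)
    print(f"worst-case slave error <= {bound:.0f} ns: {'PASS' if ok else 'FAIL'}")
```

The result of each run is what you would feed into a notification or an archive log.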

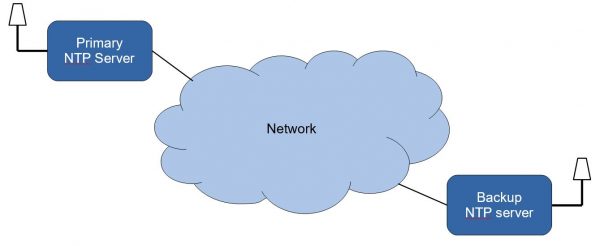

What if you want to make a measurement that is free from the perils of asymmetry? For this we turn to monitoring approach number 3. Whether you are implementing a PTP timing system or an NTP timing system, you’ll want to have a backup clock because, well … you want to sleep at night. You can put that backup clock in the same rack as the primary clock and connect them to the same switch. But then you still have a single point of failure, the switch, and you’re still not sleeping at night. Consider putting the backup NTP server at the other end of the network, topologically speaking, as shown in Figure 2.

Figure 2. Monitoring an NTP network, using a second GNSS enabled server with peer stats enabled.

The backup server should be placed where an NTP client would see the worst-case accumulated network error. Since the backup server has a GNSS receiver, it knows the correct time and can compare it to the time received via NTP using its peer statistics (peer stats). If the time coming in through NTP agrees with the GNSS receiver time, then most likely nearby NTP clients will also have good time. This doesn’t let you measure every NTP client, but you can make the measurement without network asymmetry error, because the reference it is checked against arrives by satellite rather than over the network. Clearly, a similar approach could be used in PTP, with a backup grandmaster.
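On an ntpd-based backup server, one way to automate this comparison is to scan the peerstats log for the association with the primary server. This sketch assumes the classic ntpd peerstats line layout (MJD, seconds past UTC midnight, peer address, status word, offset, delay, dispersion, jitter, with times in seconds) and that the backup server's own clock is disciplined by its GNSS receiver, so the logged offset to the primary server reflects the error of NTP-delivered time at that point in the network. The addresses, sample values and 1 ms limit are hypothetical.

```python
def worst_ntp_offset(lines, server_addr):
    """Largest |offset| in seconds logged for server_addr, or None if absent."""
    worst = None
    for line in lines:
        fields = line.split()
        # Skip malformed lines and samples for other associations
        # (e.g. the local GNSS reference clock itself).
        if len(fields) < 8 or fields[2] != server_addr:
            continue
        offset_s = abs(float(fields[4]))
        if worst is None or offset_s > worst:
            worst = offset_s
    return worst


if __name__ == "__main__":
    # Hypothetical peerstats excerpt: 192.0.2.10 is the primary NTP server,
    # 127.127.20.0 a local NMEA/GNSS reference clock.
    sample = [
        "60000 3600.125 127.127.20.0 961a 0.000000200 0.000000000 0.000001000 0.000000300",
        "60000 3600.250 192.0.2.10 9614 0.000012345 0.000850000 0.000020000 0.000005000",
        "60000 3664.250 192.0.2.10 9614 -0.000031250 0.000900000 0.000020000 0.000006000",
    ]
    limit_s = 0.001  # hypothetical application requirement: 1 ms
    worst = worst_ntp_offset(sample, "192.0.2.10")
    if worst is None:
        print("no samples for primary server")
    elif worst > limit_s:
        print(f"ALERT: NTP time off by up to {worst * 1e6:.1f} us")
    else:
        print(f"OK: worst NTP offset {worst * 1e6:.1f} us")
```

In practice the lines would come from the peerstats file in whatever statistics directory your ntpd configuration names.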

There you have it: three ways to track your network timing, so that you finally CAN sleep at night.

If you have any questions about packet timing, don’t hesitate to send me an email at doug.arnold@meinberg.de or visit our website at www.meinbergglobal.com.
